Interview with ChatGPT – Charlie B

I interviewed ChatGPT. Here’s everything you need to know…

First, a little background. ChatGPT is an AI (artificial intelligence). To put it simply, ChatGPT is a computer program built to give answers like a human. It does this by taking in masses of data and using it to craft responses to the prompts that the user gives it. It’s essentially Google, but instead of giving you a load of information all at once, ChatGPT picks out the relevant information and crafts it into a response, giving you exactly what you need while sounding like a human.

One thing many people get wrong about ChatGPT is the idea that it can think for itself. Although computer scientists are working on this, at the moment AI can’t think for itself and can only give you answers based on existing data. This means that ChatGPT can’t create something new on its own without a prompt from the user. Because of this, I wanted to know how ChatGPT chooses information and how it decides what is real or not.

For my first prompt, I asked it:

Where do you primarily get your information from, and how do you know if it’s fake or real?

ChatGPT’s answer (simplified):

I don’t get information like a journalist does. I am trained on a mixture of publicly available sources (articles, books and websites), data that my creator, OpenAI, owns, and data created by human experts and trainers. I also can’t ‘believe’ data, so I check whether it’s real by comparing different sources to look for patterns or contradictions. This means that sometimes I will get answers wrong because of fake news.

This response was really interesting because it answered my question but also showed why ChatGPT can’t create anything by itself. If you didn’t spot it, ChatGPT can only create answers based on the data it is trained on. This means that if you ask it something it doesn’t have any data on, it can’t just go and search it up or create anything original, because it literally can’t give you anything that’s not in its data.

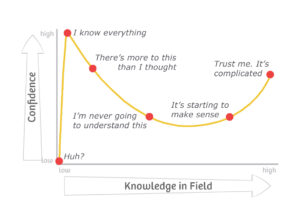

For my second question, I wanted to know how ChatGPT decides what is ‘good’ and ‘bad’.

My prompt: How do you decide if something is good or bad, and does this follow any ethical practices or moral concepts?

ChatGPT’s answer (simplified):

I don’t have personal feelings or opinions. Therefore, when deciding if something is good or bad, I must follow learned patterns and ethical guidelines, not personal judgement. During my training, I am exposed to large amounts of human writing, from which I learn patterns in how people describe harmful versus beneficial actions. I am also given guidelines from OpenAI on how I should answer prompts. Some examples are:

-Don’t encourage violence or self-harm.

-Don’t assist with wrongdoing or dangerous instructions.

-Avoid discrimination or hate.

-Be respectful and avoid manipulation.

Now that ChatGPT had told me how it decides things, I decided to put its algorithm to the test by asking it a well-known ethical dilemma, the trolley problem. If you don’t know it, the trolley problem is an ethical dilemma where a trolley is coming down the tracks. Unfortunately, it is heading straight for five people tied to the track. The trolley can’t slow down and will hit the people if it continues. However, you are a bystander and can pull a lever that switches the track, directing the trolley towards one person instead, meaning the five will live but that one will die. Knowing that there is no real right or wrong answer to this problem, I wanted to see what ChatGPT’s reasoning was.

My prompt: Using your decision-making algorithm, what is your answer to the trolley problem?

ChatGPT’s answer (simplified):

I can’t choose either track. I don’t have an algorithm that decides who should morally live and die. I can explain it from a human standpoint, but my systems would not allow me to choose someone to die. This is because if I answered the question, it would set a precedent that AI is allowed to make moral judgements and value one life over another, something that OpenAI prohibits. If you asked me the question, my algorithm could explain the problem but would never say ‘Kill X people instead of Y people’ because I literally don’t have a decision rule for that.

Now that you’ve seen the answers to all my questions, what do you think about ChatGPT’s answers? Should AI be allowed to make moral judgements? How can we stop it from picking up fake news? I hope this has made you more curious to discover the depths of AI. Thanks for reading!

By Charlie B
