Could we, should we create A.I? – Sophie Atkinson

I am guessing you will have seen at least one of the following films: The Matrix, Lucy, Her, Transcendence or I, Robot. All of these films focus on a topic which is rapidly becoming more than mere science fiction. Artificial intelligence is one of the most controversial and rapidly developing areas of science. To cover it fully in a seven minute speech would be quite a feat, so we are going to break it down into two questions: could we, and should we, create A.I?

Let’s look at the could first. I am going to talk about three things here. What even is A.I? How can we test if something is truly intelligent? And how do we get from where we are now to creating sentient computers?

So right from the beginning, what is A.I? Well, I’ll bet most of you have one form sat in your pocket. Siri, launched on the iPhone in 2011, is a form of artificial intelligence. Driverless cars developed by Google are too. But today we are talking about future A.I, which is formally called strong A.I. This means that, unlike Siri, it is not narrowed in function by rigid algorithms. Instead of outperforming humans in one task like chess, the A.I I am talking about here could learn to outperform humans in every cognitive task. However, let me make clear from the start that I am not talking about robots that can feel. That is a whole other kettle of fish, and one we shall not get into today.

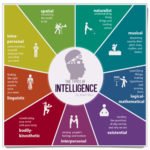

So there have been countless attempts since the field was created in the 1950s to produce a system smarter than a human, but how can we separate the systems which carry out seemingly intelligent tasks from those which actually match or surpass human intellect? There are many tests, but I only want to mention one.

My personal favourite is the coffee test, proposed by Steve Wozniak. In this test a robot has to enter a person’s house and make a coffee. Sounds simple enough… but it has to find the machine, locate the coffee, milk and sugar, then boil the water and lastly press the right button on the machine. If a robot can do all of this, learning how to recognise the elements and how to combine them, and do so in a human environment, then I would argue it is on par with any human who needs caffeine in the morning to survive. Unfortunately no robot has passed this test yet.

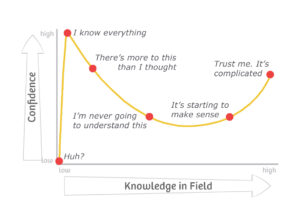

Lastly for could, how do we get from Siri and Cortana to strong A.I, self-aware systems? The answer is with much difficulty. There are so many factors, economic, social and environmental, which could hamper the progress of A.I. But there are also many avenues by which we could potentially create a sentient computer. From software development to brain emulation, there are too many ways of making A.I for me to talk about today. Ray Kurzweil predicts A.I could be possible by 2045. That means at the age of 46 for most of us we could see the creation of true artificial intelligence. But let’s not get ahead of ourselves. Experts in the computer and A.I field disagree on the time scale for A.I: some think it is decades away, others centuries. But one thing most of them agree on is that it will happen and is anything but impossible.

Now for the second question, and I think the key one here. If we suddenly had the ability to create super intelligent computers… should we? Stephen Hawking has warned that A.I could be the downfall of mankind, but others like Eric Horvitz say it will aid society as a whole. We can never be sure about something until it happens, but for now let’s consider two things: the dangers of A.I and the benefits it could bring.

Let’s begin with the dangers. Now when most people think of dangerous A.I, they jump straight to killer robots that have taken over the world, as in The Matrix or The Terminator, but really the danger of robots gaining their own will and thirst for destruction is minimal. There are really two realistic dangers with A.I.

The first is that the A.I is programmed to do something dangerous. A gun sat on the ground is harmless, but it is when a soldier commanded by a dictator picks it up and fires it that it becomes a threat. It is the same with artificial intelligence. Autonomous weapons are systems designed to kill. If these fell into the wrong hands, or some may argue if these were successfully developed at all, they could cause mass casualties. As with any new technology, we need to be developing it for benevolent reasons, not for destruction or gain of power.

The second issue is that the A.I is programmed to do something beneficial but develops a destructive method for achieving its goal. This can happen whenever we fail to fully align the A.I’s goals with ours. If you ask an obedient intelligent car to take you to the airport as fast as possible, it might get you there chased by helicopters and covered in vomit, doing not what you wanted but literally what you asked for. If a super-intelligent system is tasked with an ambitious geoengineering project, it might wreak havoc with our ecosystem as a side effect.

Now on a lighter note, let’s look at the good stuff. One of the best things about artificial intelligence is the massive computing and processing power it has. Ask a person to sift through thousands of weather patterns, traffic announcements or police reports and it would take an age. Ask an intelligent computer system and it can help you to predict the next natural disaster, come up with infrastructure to allow driverless cars to operate on a connected system to reduce crashes or, like in RoboCop, work out how to reduce crime rates. The point is a computer can look past all of the socio-political junk and get to the root of our key issues today. We could solve world hunger, the energy crisis and so on, all with the help of A.I.

On a more selfish tack, one specific use I would love to see develop is an A.I personal assistant. One of the hardest things we are trying to crack is getting computers to understand natural speech patterns. If they could, we could have a full conversation with an assistant about what restaurant to book for dinner, or have the assistant predict our usual behavioural patterns to remind us about our favourite show or an upcoming meeting.

So we’ve looked at the good and the bad. I think the key message to take away is that A.I is certainly possible in the future. We cannot know exactly when, but it will happen as humans strive to improve beyond our own boundaries of intellect. However, like any technology, once we have it, it is who is using it and how we use it which will define whether it is our greatest achievement or our ultimate failure.
